Contemporary business is data-driven, so companies that fail to make optimal use of their data are at an enormous disadvantage. Data fragmentation can limit the utility of an organization’s data by making it less accessible, less consistent, and less accurate. It also makes that data much more difficult to manage and more expensive to maintain.

Data fragmentation is common to all computing systems, and no company can avoid it entirely. But excessive, uncontrolled fragmentation can seriously impair a company’s data processing operations, undermine its competitiveness, and limit its opportunities to grow. Fortunately, there are numerous ways to control data fragmentation and improve data quality, helping businesses fully leverage their digital assets.

What Is Data Fragmentation?

Data fragmentation occurs when a single file or piece of data is stored in multiple locations. The data can be dispersed in main memory, on a single disk or hard drive, or on multiple data storage sites, either on-premises or in the cloud. This can result in corruption of the data and inefficient use of system resources.

When organizations distribute large databases across multiple devices and locations, they are essentially using one or more approaches to deliberate data fragmentation. This is especially useful for organizations with very large amounts of data, as it can reduce their total storage requirements while making it easier to manage their data and add new storage capacity as needed.

Even when fragmentation is intentional, it is imperative to plan it carefully and optimize the approach to avoid potentially serious consequences.

Key Takeaways

- Data fragmentation makes data less useful and drives up compute and storage costs.

- Correcting fragmentation boosts productivity and sharpens day-to-day decision-making.

- There are multiple ways to resolve fragmentation issues, including a mix of data architecture, governance, and tooling strategies.

- Ongoing data-quality monitoring, AI-driven anomaly detection, and periodic audits can help prevent fragmentation from creeping back in.

- Unified cloud platforms—such as an enterprise resource planning (ERP) system coupled with a cloud data warehouse—centralize operational and external data, eliminating silos at the source.

Data Fragmentation Explained

When a computer’s processing unit loads and unloads data from memory, it breaks it into chunks. As these chunks are retrieved and stored, they are spread across a disk or several disks. While this can allow for more efficient data processing and reduced network traffic, especially with a cloud-based distributed database, it can also waste storage capacity and reduce overall system performance. Over time, as the process is repeated again and again, the data becomes more dispersed and the potential for inefficiencies and data corruption increases.
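
The way repeated writes and deletes scatter a file can be illustrated with a toy model. The sketch below is a simplified simulation, not how any particular file system works: the 12-block `disk`, the first-fit policy, and the helper names `allocate`, `delete`, and `count_fragments` are all assumptions made for illustration.

```python
def allocate(disk, file_id, size):
    """First-fit allocation: claim the lowest-numbered free blocks."""
    free = [i for i, owner in enumerate(disk) if owner is None]
    if len(free) < size:
        raise ValueError("not enough free space")
    used = free[:size]
    for i in used:
        disk[i] = file_id
    return used


def delete(disk, file_id):
    """Free every block owned by file_id."""
    for i, owner in enumerate(disk):
        if owner == file_id:
            disk[i] = None


def count_fragments(blocks):
    """Count contiguous runs in a sorted list of block indices."""
    if not blocks:
        return 0
    return 1 + sum(1 for a, b in zip(blocks, blocks[1:]) if b != a + 1)


disk = [None] * 12          # hypothetical 12-block disk
allocate(disk, "A", 3)      # blocks 0-2
allocate(disk, "B", 3)      # blocks 3-5
allocate(disk, "C", 3)      # blocks 6-8
delete(disk, "B")           # leaves a 3-block hole at 3-5
d_blocks = allocate(disk, "D", 5)  # too big for the hole, so it is split

print(d_blocks, count_fragments(d_blocks))  # [3, 4, 5, 9, 10] 2
```

File D lands in two separate runs because the hole left by B is too small to hold it. Repeating this cycle many times is what gradually disperses data.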

Types of Data Fragmentation

There are two basic types of data fragmentation: physical and logical. Physical fragmentation refers to data scattered across different locations on the same disk, or across different disks and storage devices. Logical fragmentation occurs when data is duplicated or partitioned across different applications or systems, which often leads to incompatible or inconsistent versions of the same data.

Here’s a more detailed look at the different ways in which data can be fragmented:

- Horizontal fragmentation: Refers to splitting the rows of a database table into several subtables that follow the same format, or schema, but are stored in different locations. Horizontal fragmentation is used to improve the performance of a distributed database, especially when dealing with very large sets of data. However, it can also lead to decreased performance, due to greater data scattering and more intensive processing requirements—plus, data integrity becomes harder to maintain.

- Vertical fragmentation: Divides the columns of a database into separate groups and then places them in different locations. This type of fragmentation is used to optimize database performance by placing columns that are frequently accessed together in the same location. The technique reduces the volume of data that has to be retrieved for each query. But, as with horizontal fragmentation, breaking apart the columns of a database increases system complexity and often requires additional resources, creating more potential points of failure.

- Mixed or hybrid fragmentation: Combines horizontal and vertical fragmentation to accomplish the same ends, but to a greater degree. Strategically locating where data is stored makes data queries more efficient and reduces network traffic. System complexity, however, is also increased, as are data scattering, resource requirements, and potential failure points. The latter include increased risk of data duplication and data inconsistencies across fragments.

- Database-level fragmentation: Refers to distributed database data that isn’t stored contiguously but scattered across multiple locations instead. When the logical organization of the data doesn’t align with its physical order, inconsistencies are created that lead to inefficiencies in how the data is stored and retrieved.

- Storage-level fragmentation: Also referred to as disk fragmentation, this is what many people think of as data fragmentation. Storage-level fragmentation occurs naturally within a computer system, as data files are repeatedly written, modified, and deleted. When a modified file grows too big for its original disk space, or when the space freed by deleting a file is too small for a new file, the file is broken into fragments and dispersed across the disk. Since more computing operations are required to retrieve these files, disk fragmentation can degrade system performance, especially when the files in question are very large or frequently used.

- Application-level fragmentation: Occurs when different applications use different formats to store data, making it more difficult or impossible for an application to access and use data that’s been stored by another application. While fragmentation at the application level can sometimes make it easier to store and manage certain data, it is mainly detrimental, creating data inconsistencies and preventing one application from sharing data with another.

- Organizational fragmentation: This creates data silos that prevent one department or business function from sharing its data with others. It occurs when different parts of the organization use different applications that store their data using unique formats—instead of using one standardized format for the entire enterprise. When this happens, it becomes much more difficult for one part of the business to share data with another, undermining interdepartmental coordination and teamwork.
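
The horizontal and vertical schemes in the list above can be made concrete with a small in-memory table. This is a minimal sketch under stated assumptions: the `customers` data and the helper names are hypothetical, and a real distributed database would handle placement and reassembly itself.

```python
# A small "customers" table as a list of row dictionaries (illustrative data).
customers = [
    {"id": 1, "name": "Ada", "region": "EU", "balance": 120.0},
    {"id": 2, "name": "Ben", "region": "US", "balance": 75.5},
    {"id": 3, "name": "Chloe", "region": "EU", "balance": 0.0},
]


def horizontal_fragments(rows, key):
    """Split rows into groups that share the same value for `key`."""
    fragments = {}
    for row in rows:
        fragments.setdefault(row[key], []).append(row)
    return fragments


def vertical_fragments(rows, column_groups, primary_key="id"):
    """Split columns into groups; every fragment keeps the primary key."""
    return [
        [{primary_key: row[primary_key], **{c: row[c] for c in cols}} for row in rows]
        for cols in column_groups
    ]


def rejoin_vertical(fragments, primary_key="id"):
    """Rebuild full rows by merging vertical fragments on the primary key."""
    merged = {}
    for fragment in fragments:
        for partial in fragment:
            merged.setdefault(partial[primary_key], {}).update(partial)
    return [merged[k] for k in sorted(merged)]


# Horizontal: same schema, rows divided by region.
by_region = horizontal_fragments(customers, "region")

# Vertical: columns divided into a profile group and a finance group.
profile, finance = vertical_fragments(customers, [["name", "region"], ["balance"]])

# Reassembly needs the shared key, which is why each fragment carries "id".
assert rejoin_vertical([profile, finance]) == customers
```

Keeping the primary key in every vertical fragment is the design choice that makes reassembly possible. Losing or mismatching that key is one way fragments drift into inconsistency.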

What Causes Data Fragmentation?

Ungoverned data fragmentation creates inefficiencies, degrades performance, and interferes with business operations. The causes are numerous:

- Using multiple storage locations: There are many good reasons for a company to spread its data among different devices both on-premises and in the cloud. But using multiple storage locations also introduces more potential points of failure, making the prospect of data redundancies, inconsistencies, and corruption much more likely.

- Siloed systems: As described above under application-level fragmentation, siloed systems arise when different applications use different formats to store data. Departmental databases are isolated from one another, interfering with data sharing and organizational cohesion.

- Inconsistent data standards: Poor data governance often yields inconsistent data standards, which is a major cause of organizational fragmentation. When different data formats, structures, and schemas are employed across a company, one part of the business can’t readily share information with another, making it much more difficult to arrive at a single trusted version of corporate data. This interferes with the ability of the entire enterprise to act in concert.

- Mergers and acquisitions: These can also lead to organizational fragmentation, since the acquired company may rely on databases that format information in an entirely different way from those used by the acquirer. With data sharing difficult or impossible, the process of integrating the new business into existing operations can be greatly hindered.

- Legacy systems: Another source of organizational and application-level fragmentation, legacy systems often employ outdated data formats and technology. Since they can’t be readily integrated with newer systems, they must operate as islands unto themselves, unable to share data with the rest of the organization—at least not without complex and expensive data integrations.

- Unstructured data: Growing organizational reliance on unstructured data, such as videos and teleconferencing recordings, also contributes to fragmentation. This is hard to avoid, since the apps that generate this data typically store it in diverse, unmanaged formats that can’t interact. The challenges posed by unstructured data are mounting at many businesses, as nontextual forms of presenting and communicating information continue to gain popularity.

- Poor data governance: This is frequently the chief underlying reason that organizational fragmentation develops. Without proper policies and standards in place, data mushrooms out of control, shoehorned into whatever formats and schemas are deemed expedient by the different groups that hold it. Redundancy and inconsistency rule the day, and the data can no longer be trusted.

- Decentralized data ownership: This goes hand in hand with poor data governance. Strong governance demands that every data source has a designated owner responsible for maintaining the data’s integrity and adhering to clear, enterprisewide data standards. When there is no established owner and multiple teams manage the same data independently, consistency cannot be maintained. This is a recipe for organizational fragmentation and the disharmony it creates.
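
Inconsistent standards are easier to see with an example. The sketch below assumes two hypothetical department exports that describe the same customer with different field names and date formats. The `MAPPINGS` table and `to_canonical` helper are illustrative, not part of any specific product.

```python
from datetime import datetime

# Hypothetical exports from two departments describing the same customer.
sales_record = {"CustomerName": "Acme Corp", "SignupDate": "03/15/2024", "Email": "OPS@ACME.COM"}
support_record = {"customer": "acme corp ", "signup": "2024-03-15", "email": "ops@acme.com"}

# Per-source mapping: canonical field -> (source field, date format or None).
MAPPINGS = {
    "sales": {
        "name": ("CustomerName", None),
        "signup_date": ("SignupDate", "%m/%d/%Y"),
        "email": ("Email", None),
    },
    "support": {
        "name": ("customer", None),
        "signup_date": ("signup", "%Y-%m-%d"),
        "email": ("email", None),
    },
}


def to_canonical(record, source):
    """Translate one source record into the shared, enterprise-wide schema."""
    out = {}
    for field, (src_field, date_fmt) in MAPPINGS[source].items():
        value = record[src_field].strip()
        if date_fmt:
            # Dates are normalized to ISO 8601 (YYYY-MM-DD).
            value = datetime.strptime(value, date_fmt).date().isoformat()
        else:
            # Text fields are compared case-insensitively.
            value = value.lower()
        out[field] = value
    return out


a = to_canonical(sales_record, "sales")
b = to_canonical(support_record, "support")
print(a == b)  # True: both records describe the same customer
```

Before normalization the two records share no field names and look unrelated. After it, they compare equal, which is the kind of single trusted version that consistent standards aim for.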

Problems Caused by Data Fragmentation

When data fragmentation is unplanned and unchecked, a panoply of problems arises that can impede a business. These include:

- Performance issues: Longer query times and slower processing stemming from more complex data retrieval diminish overall system performance. These extend turnaround times, undermining workplace productivity, delaying time-sensitive reports, and backlogging urgent requests.

- Data inconsistency: When an organization finds conflicting and unreliable information in its systems, it can’t trust its own data. This can paralyze decision-makers, freeze new initiatives, and stall growth.

- Increased storage costs: At the very least, out-of-control fragmentation increases storage costs, since it leads to numerous redundancies and inefficient use of disk space. If the causes of excessive fragmentation aren’t identified and addressed, an organization will be forced to pile on additional costly storage resources to maintain normal operations.

- Security risks: Fragmentation also increases an organization’s vulnerability to breaches and unauthorized access. This is especially true of data packet fragmentation, which occurs whenever a packet exceeds the maximum transmission unit of a network, but it can also be due to file fragmentation. Fragmented packets and files are harder for cybersecurity programs to track, and hackers can exploit this to bypass security controls by splitting their malware into smaller fragments. Similarly, organizational fragmentation tends to increase security risk. Data silos and poorly integrated security tools make it much more difficult for security professionals to obtain a comprehensive threat picture and to enforce across-the-board safeguards and policies.

- Complex data management: When data is widely scattered, data management becomes substantially more complex. This creates additional resource overhead and renders data integrity harder to maintain.

- Reduced decision-making accuracy: As noted above, when organizational data is widely dispersed and inconsistent, it is no longer trustworthy. Managers cannot be certain that their decisions are based on reliable information, forcing them to take their best guess or postpone deciding. Either way, the business suffers from less timely and accurate decision-making.

- Compliance challenges: Remaining in compliance also becomes more challenging, since so many government and regulatory requirements involve maintaining consistent records that can be easily accessed, along with digital paper trails that show the original source of the data.

- Limited scalability: Data fragmentation can lead to inefficient resource utilization and excessive system requirements that limit an organization’s ability to grow. This is due to inefficient data handling, which hinders data sharing and system expansion. In other words, unchecked fragmentation can, on the one hand, cause a business to waste resources and, on the other, prevent it from scaling up those resources to meet new business requirements.
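
The packet fragmentation mentioned under security risks comes down to simple arithmetic. The sketch below is a simplified model of IPv4-style fragmentation. It assumes a 1,500-byte maximum transmission unit, a 20-byte header with no options, and offsets counted in 8-byte units. The function name `fragment_payload` is hypothetical.

```python
def fragment_payload(payload_len, mtu=1500, header_len=20):
    """Return (offset_in_8_byte_units, data_length, more_fragments) tuples."""
    # Each fragment except the last must carry a multiple of 8 data bytes.
    max_data = (mtu - header_len) // 8 * 8
    if max_data <= 0:
        raise ValueError("MTU too small for the header")
    fragments = []
    offset = 0
    while offset < payload_len:
        length = min(max_data, payload_len - offset)
        more = offset + length < payload_len
        fragments.append((offset // 8, length, more))
        offset += length
    return fragments


frags = fragment_payload(4000)
for frag in frags:
    print(frag)
# (0, 1480, True)
# (185, 1480, True)
# (370, 1040, False)
```

A 4,000-byte payload becomes three fragments. A security tool that inspects each fragment in isolation never sees the whole payload, which is the gap the list item above describes.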

Benefits of Fixing Data Fragmentation

With so many disadvantages associated with ungoverned data fragmentation, it stands to reason that bringing fragmentation under control has many advantages, including the following:

- Reduced costs for equipment and personnel: When data management is centralized and data scattering is reined in, there is less file redundancy and data duplication. This means less disk space and fewer computational cycles are needed, and less bandwidth is required, which translates into lower equipment expenditures. Data consolidation and simplified data management also mean that fewer IT specialists are needed to maintain databases, perform queries, and ensure data integrity. Companies can either trim their IT staffs or reassign personnel to higher-value projects.

- Better data quality and consistency: Reduced data diffusion across disks and devices reduces errors and improves data integrity. Data consolidation among departments and functions reduces redundancies and improves data accuracy. Introducing enterprisewide data standards and formats eliminates silos and allows data to be utilized throughout the organization.

- Improved decision-making: With consistent, reliable data readily available, line managers can confidently use it to make better decisions. Plus, upper management can obtain a 360-degree view of the company, paving the way for greater integration and operational effectiveness. This ready access to data, combined with shorter query times and timelier report generation, shortens the decision-making cycle. A 360-degree view of the business means management can spot key patterns and market trends more easily, which facilitates faster and more accurate decisions that can elevate the company’s competitive posture and improve its strategic position.

- Increased productivity and efficiency: For IT managers and data analysts, constraining fragmentation streamlines the extract, transform, and load (ETL) process, significantly reducing the time and effort required for data reconciliation and integration. For business managers, the ability to quickly find and access data from anywhere in the enterprise means less time spent searching for information and more time available for analyzing and acting on that information. Moreover, with all managers and teams able to access and utilize the same set of data, barriers to information sharing and collaboration fall away, since everyone is now on the same page.

- Enhanced security: Less fragmentation means less data loss and corruption. And since the data is easier to manage and control, it becomes easier to monitor and protect. Integrated security tools, meanwhile, provide a more comprehensive view of the threat landscape, reducing the risk of a data breach or successful malware attack.

- Improved compliance: Adhering to government and regulatory requirements is all about transparency. Regulatory bodies want to know where certain information has come from, where it has gone, and how it’s protected. They also want to verify its accuracy. Defragmenting data and dismantling data silos create a unified data environment, so it is easier to keep tabs on different types of information. Every piece of data has its own digital paper trail, which greatly simplifies the challenge of demonstrating compliance with industry regulations and standards.

How to Detect Data Fragmentation

Since some degree of data diffusion occurs naturally within all computing systems, the question becomes one of degree: How do you determine when you’ve crossed the line from normal fragmentation to excessive, detrimental fragmentation? There are a number of techniques to employ:

- Data mapping and inventory: This is the starting point for effective data management. A data inventory involves assembling a catalog of all of an organization’s data assets, including the type of data that’s collected, where it’s stored, and how it’s used. Data mapping aims to provide a visual representation of the data inventory. The process can be a painstaking one, since it involves tracking and documenting all the data elements within an organization, including data sources, data fields, databases, and data warehouses. The roadmap that’s produced depicts how the data moves and changes across all organizational systems and departments, including any data exchanges that take place with customers and vendors. Data maps provide an overview of all data generated by, and that flows through, a business. They show where the data originates, how it’s used, and where it goes. By revealing inconsistencies, redundancies, and discrepancies between different data sources, data mapping is a very basic and effective way to detect data fragmentation.

- Data redundancy analysis: Data redundancy occurs when the same set of data appears in more than one table or database. It wastes storage space and leads to inconsistencies among different versions of the same data, making it unreliable. A redundancy analysis compares records across tables and systems to identify duplicated and overlapping data so it can be removed, reducing the amount of data that needs to be stored and processed while improving its consistency and quality.

- Cross-system integration testing: Also known as system integration testing (SIT), this is used during software development to ensure that the different components of an application or system interact and function correctly. Elements of data handling covered by SIT include conversions among different data types, validation rules for data integrity and consistency, and error-handling for failed data exchanges. When data is diffused across a system or organization, SIT can be used to determine if the data fragments are interacting properly and can be reassembled and processed correctly. The technique is particularly useful for identifying data loss or corruption.

- Data quality audits: Conducted by either internal or external auditors, data quality audits are used to assess the completeness, accuracy, and consistency of a company’s data. Audits can uncover data fragmentation issues, including data duplication, missing or incomplete records, and inconsistencies between departments or systems. An audit can also help pinpoint the source of the problem, especially when it pertains to an organizational issue, such as a lack of data governance or system integration challenges.

- Metadata analysis: Metadata is data about an organization’s data, including how it’s structured and formatted and the schemas to which it adheres. It can be used to extract information, such as the origin of a file, its creation date, and how frequently it’s accessed. This type of data analysis can then be used to help determine why data is becoming fragmented and the best ways to prevent it. For example, by comparing the metadata from different versions of a file, a database manager can spot discrepancies and inconsistencies that indicate whether the file is fragmented, duplicated, or both. The manager can also use the metadata to identify inconsistencies in data governance policies that might be causing the fragmentation.

- ETL and query performance monitoring: ETL is a process for integrating data from multiple applications and systems into a single, unified format. Query performance monitoring analyzes various database metrics, such as response times and resource utilization, to gauge query efficiency. ETL can be used to spot missing values, duplicate records, and inconsistencies in the data, while slow query times and excessive resource usage show up during query performance monitoring. All of these are indicative of fragmented data, and, used in tandem, the two techniques present an effective way to flag fragmentation issues and identify their causes.

- User access and usage patterns: Analyzing this data can unearth fragmentation issues that serve as barriers to productivity and optimal application performance. Analyzing user access patterns, for instance, sheds light on the various ways in which data is stored and retrieved by different departments and systems. This can reveal where information may be siloed and inaccessible to certain groups, undermining collaboration between teams. This approach is also well suited for identifying data irregularities and redundancies, which, together with information silos, are hallmarks of application and organizational fragmentation.

- Automated data lineage tools: These are used to track data from source to destination. They allow an organization to map out its data flow and determine the points at which fragmentation takes place. By tracing data flows and transformations, organizations can pinpoint the root causes of data quality problems and take corrective actions promptly. Data lineage lets data owners know what changes were made to the data, which is important for determining if data quality standards were followed. It also lets them track who accessed the data, which can help them evaluate the data security requirements associated with their data governance policies. The more visibility a business has into its data’s lineage, the greater its ability to develop and enforce data governance requirements that prevent fragmentation and preserve data quality.
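
Several of the techniques above, redundancy analysis in particular, come down to comparing records across systems. The sketch below is a minimal illustration rather than a production tool: the `crm` and `billing` exports are hypothetical, and `find_redundancy` assumes every field is a string and that `email` is a reliable matching key.

```python
from collections import defaultdict

# Hypothetical records exported from two systems.
crm = [
    {"email": "ops@acme.com", "phone": "555-0100"},
    {"email": "info@globex.com", "phone": "555-0199"},
]
billing = [
    {"email": "OPS@acme.com ", "phone": "555-0100"},
    {"email": "info@globex.com", "phone": "555-0142"},
    {"email": "hello@initech.com", "phone": "555-0123"},
]


def find_redundancy(sources, key="email"):
    """Return (duplicates, conflicts): keys held by more than one record.

    duplicates: every copy agrees after normalization (pure redundancy).
    conflicts:  copies disagree on at least one field (inconsistency).
    """
    seen = defaultdict(list)
    for rows in sources.values():
        for row in rows:
            normalized = {k: v.strip().lower() for k, v in row.items()}
            seen[normalized[key]].append(normalized)

    duplicates, conflicts = [], []
    for value, copies in seen.items():
        if len(copies) < 2:
            continue
        if all(copy == copies[0] for copy in copies):
            duplicates.append(value)
        else:
            conflicts.append(value)
    return sorted(duplicates), sorted(conflicts)


duplicates, conflicts = find_redundancy({"crm": crm, "billing": billing})
print(duplicates)  # ['ops@acme.com']
print(conflicts)   # ['info@globex.com']
```

Duplicates waste storage but are safe to merge. Conflicts are the more serious finding, because someone has to decide which version is correct.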

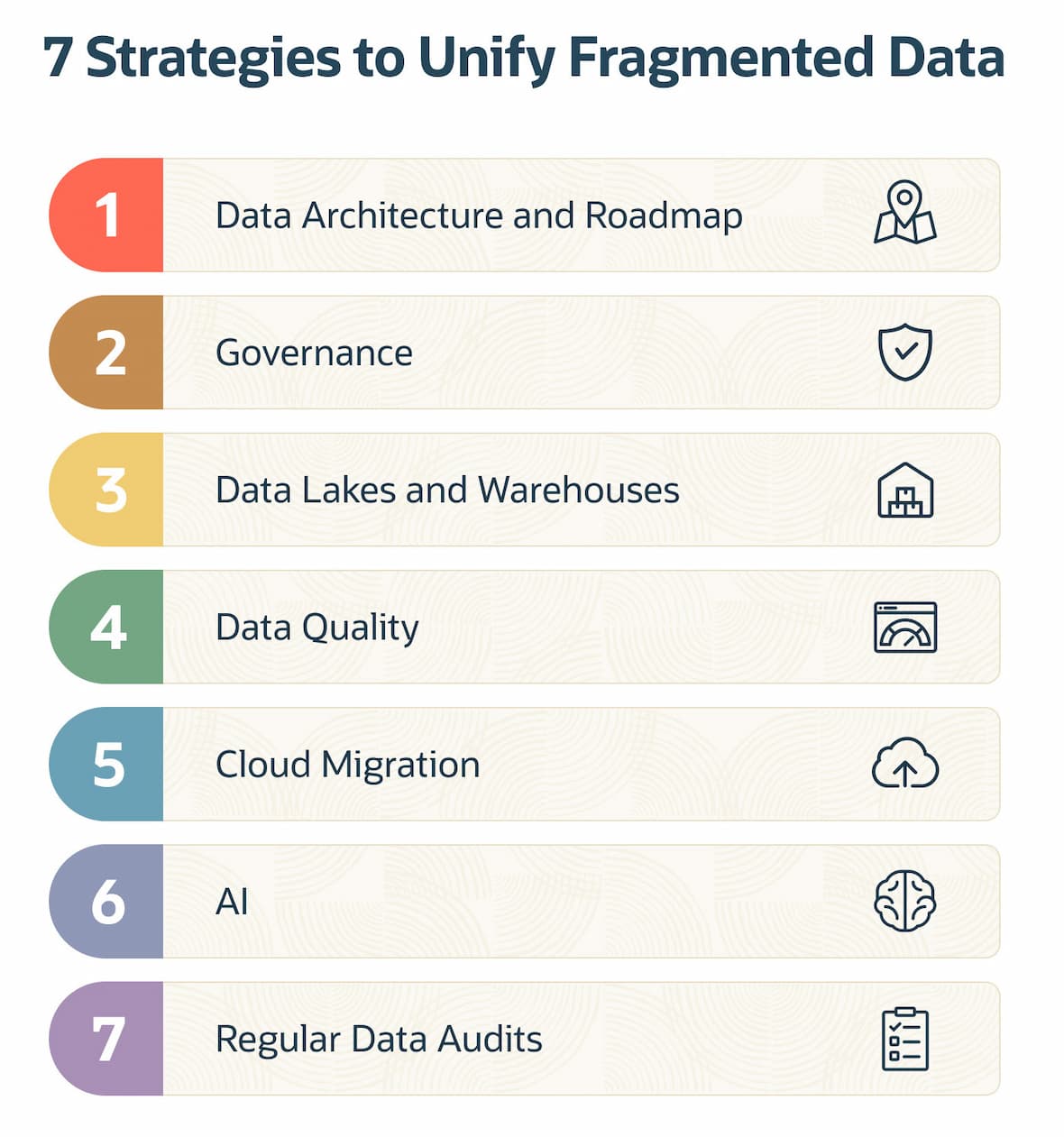

7 Strategies to Solve Data Fragmentation

Data fragmentation can impair virtually any business. Within the heavily regulated financial services sector, for instance, fragmented data can lead to customer privacy concerns and noncompliance issues. In healthcare, fragmentation can result in misdiagnoses and lead to unnecessary procedures. For retailers, scattered inventory, sales, and customer data can cause costly missteps that undermine customer satisfaction and derail financial performance.

To reduce these and other risks, companies need to closely monitor and reduce data errors derived from fragmentation. Here are seven different organizational strategies to employ:

- Adopt a data architecture and roadmap: Companies should have a documented data architecture and roadmap to effectively manage their data in a consistent fashion. These should be primarily based on business priorities, not technical considerations. They should take into account the business’s current and potential future sources of data, as well as the way the data is likely to be used. Growth opportunities and the role of new and emerging technologies should all be considered. The resulting overview of how the business generates and utilizes its data provides a foundation for identifying and controlling fragmentation-related issues. A growing number of organizations choose to reinforce that architectural foundation by rolling out an enterprise resource planning (ERP) system. ERP solutions can unify finance, inventory, order management, and other core functions in one central data repository, supplanting a patchwork of point solutions that scatter data across the business.

- Define appropriate data governance policies: Based on the architecture and roadmap, establish and enforce clear and consistent policies for how data is to be acquired, stored, formatted, and shared across the organization. These policies should delineate the way data is to be accessed and used and designate the owner responsible for maintaining and protecting it. Strong data governance breaks down data silos and provides a framework for maintaining data integrity and consistency.

- Consolidate disparate data in data lakes and data warehouses: Diverse types of structured and unstructured data can be stored, cleaned, and formatted in a data lake or a data warehouse so the data can then be searched or analyzed in aggregate. Both types of repositories minimize fragmentation and eliminate silos, supporting greater collaboration and teamwork, as well as more effective decision-making.

- Employ data-quality monitoring tools: These tools measure and evaluate the accuracy, completeness, consistency, and timeliness of a company’s data to help ensure that the data meets the standards set by the organization and is suitable for its intended use. The monitoring process can be automated and used to help both clean and validate data, thus identifying issues before they lead to greater fragmentation.

- Migrate data to the cloud: Cloud repositories impose a certain discipline on an organization that helps resolve organizational fragmentation issues and eliminate data silos. Cloud platforms are not only flexible and scalable, they also provide ready access to a wealth of tools for managing data quality and correcting data diffusion. During the cloud migration process, standards are set, storage is centralized, and disparate systems are integrated. This breaks down data silos and supports greater consistency in data governance. Data accessibility is improved and more sophisticated analytics can be conducted.

- Use artificial intelligence: With AI, an organization can automate its data monitoring and conduct regular data quality checks. AI can help quickly and efficiently discover inconsistencies and hidden anomalies within data, identify counterproductive usage patterns, and suggest ways to minimize fragmentation risks.

- Conduct annual or semiannual data audits: Regular audits are another excellent way to identify and correct discrepancies within an organization’s data. Audits spot errors and inconsistencies, flag duplicated and incomplete records, and highlight missing information. A properly conducted audit will not only reveal where fragmented data is interfering with data quality but will also shed light on the source of the fragmentation.
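
The monitoring and anomaly-detection strategies above can be approximated with very simple checks. The sketch below is a basic statistical stand-in, not an AI model or a commercial monitoring tool. The sample `rows`, the `daily_loads` figures, and the threshold of two standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def completeness(rows, required):
    """Fraction of rows in which every required field is present and non-empty."""
    if not rows:
        return 1.0
    ok = sum(all(row.get(f) not in (None, "") for f in required) for row in rows)
    return ok / len(rows)


def anomalies(values, threshold=2.0):
    """Indices of values more than `threshold` sample std devs from the mean."""
    if len(values) < 3:
        return []
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 3, "email": "c@example.com"},
    {"id": 4, "email": None},
]
# Hypothetical row counts from eight nightly loads; the last one collapsed.
daily_loads = [1000, 1010, 990, 1005, 995, 1002, 998, 200]

print(completeness(rows, ["id", "email"]))  # 0.5
print(anomalies(daily_loads))               # [7]
```

A z-score check like this is crude, since a single extreme value also inflates the standard deviation it is measured against. It still shows the idea of flagging a load that departs sharply from the norm before the gap spreads downstream.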

In addition to these strategies, there are a handful of more nitty-gritty technical tactics companies can use to reduce their fragmentation issues. These include:

- Selecting a data format that’s well suited to the firm’s business requirements and can be used by all—or at least most—of the applications in the company.

- Conducting regular disk maintenance, including hard drive defragmentation, which rearranges files to minimize data diffusion. It also helps to delete unnecessary, outdated, and redundant files and to keep between 15% and 20% of each drive free, which improves the drive’s ability to manage file placement and limits data scattering.

- Converting to solid-state drives (SSDs), which generally don’t require defragmentation. SSDs are also usually more durable than their magnetic disk counterparts.
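
The free-space guideline in the list above is easy to check programmatically. The sketch below uses Python’s standard `shutil.disk_usage`. The helper names and the 15% default, taken from the lower bound mentioned above, are illustrative assumptions.

```python
import shutil


def free_space_ratio(path="."):
    """Return the fraction of the drive holding `path` that is currently free."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return usage.free / usage.total


def needs_cleanup(ratio, minimum=0.15):
    """True when free space has dropped below the chosen minimum fraction."""
    return ratio < minimum


ratio = free_space_ratio(".")
print(f"{ratio:.1%} free; cleanup recommended: {needs_cleanup(ratio)}")
```

A check like this could run on a schedule and raise an alert before a drive becomes full enough that new files have to be split across scattered gaps.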

Avoid Data Fragmentation With NetSuite

Data silos, duplicate records, inconsistent formats—these are the results of data fragmentation that impede business growth. NetSuite’s cloud-based ERP tackles these problems at the source by running your accounting, inventory, supply chain, order-to-cash processes, and more on one unified data model. Every transaction is captured once, in one place, so teams can stop reconciling spreadsheets and start trusting their common operational and financial data. Still, today’s businesses also need to blend operational data with information from ecommerce platforms, marketing systems, third-party apps, and other external information sources. NetSuite Analytics Warehouse makes that straightforward: It’s a cloud-based data warehouse that can automatically pipe NetSuite records—and virtually any external data feed—into an Oracle-powered repository and can then apply AI-driven data-quality checks. It can produce cross-functional dashboards in minutes.

Together, NetSuite ERP and Analytics Warehouse can prevent new data silos from forming, cleanse legacy data inconsistencies, and give leaders the end-to-end visibility they need to make sound decisions, at speed—no custom integrations or manual defragmentation required.

Data fragmentation is a natural occurrence within every computing system and sometimes is used deliberately to distribute data more efficiently. But ungoverned and unchecked, data dispersal can waste resources and impair data quality. This can prove costly for companies and can undermine their competitiveness and limit their growth. Fortunately, there are many ways to identify and correct detrimental fragmentation, beginning with data mapping and the adoption of sound data governance policies. Implementing these and other strategies can protect the integrity of an organization’s data, ensuring that it can be used in the most advantageous way.

Data Fragmentation FAQs

What is the difference between data fragmentation and data replication?

Data fragmentation occurs when a single file or piece of data is broken into smaller pieces and stored in multiple locations. This can improve storage efficiency and data access, but it can also result in data corruption and the inefficient use of system resources. Data replication, on the other hand, is the creation of identical copies of the same data that are stored at different locations. Replication improves query times and system performance, and it provides a buffer against system failures and data corruption. But it also requires more storage space and can lead to inconsistencies between different versions of the same data.

What is the problem with data fragmentation?

Some degree of fragmentation occurs within all computer systems. But when it is ungoverned and unchecked, it can result in diminished system performance, longer query times, and slower processing. Fragmentation can also lead to data inconsistencies and corruption and less efficient use of system resources, which increases network and storage costs.