By Art Wittmann, editor of Brainyard

You’re reading Art of Growth, a twice-monthly column by Art Wittmann.

Cool people at cool dinner parties talk about cool things as though they’re second nature. Summers in the Greek Islands, photo safaris to Africa, paddling the Amazon in a kayak. What Chris Hemsworth eats for breakfast. Cool people just know and do more cool stuff than the rest of us.

Nerd-cool people may wax eloquent about the “transformative” nature of AI or the “pervasiveness” of big data: “It’s everywhere, you know. My entire portfolio is dedicated to AI and big data unicorns.”

Riiiiight.

The more skeptical among us wonder if Chris didn’t recently spill his secret pancake habit on Instagram. And all those AI unicorns? We can’t seem to find them. At least not yet.

Now, if it were just at dinner parties where the tech illuminati uttered such quips to one another, it would be merely annoying. But it’s gone much further than that. Marketers and journalists who should know better have played fast and loose with both terms to the point where business owners might feel they’re being left behind if they don’t dabble in either technology.

Better buy some AI, right?

A vanishingly small number of emerging businesses have big data problems, much less need AI. Yet in my last job, I saw loads of companies pitching “AI” and “big data” solutions that didn’t exist, if you consider the true meaning of those terms. What those sales teams usually meant was that, if a potential client had more data than it knew how to analyze, they had products and services that could help. Innocent enough, I suppose. By calling it a “big data problem,” the client got to feel like its problem was bleeding-edge, and the vendor got to put itself in rarefied data analysis air.

Some marketers stretch the definition of "AI" and "big data." Don't be that guy.

What is big data, exactly?

Marketers, salespeople and other well-meaning exaggerators might also plead not guilty because there is no widely accepted definition of “big data,” and the meaning is a moving target. What was big data in 2005 isn’t in 2020. Here’s the reality: Big data sets are those that can’t be handled by the usual data analysis and management tools. So, not spreadsheets and not relational databases.

Microsoft says that the 32-bit version of Excel can handle data sets in the gigabytes, while the 64-bit version can digest terabytes. Most RDBMS products claim near-petabyte capacity. That’s a lot of data, so if big data is a dataset that can’t be handled by these tools, just how big does it have to be to be big?

Size is only part of the problem in defining what is and isn’t “big data.” Just as important is variety. A lot of the data we create now doesn’t lend itself to structured storage. Think images, video, audio and data produced by all sorts of sensors and instruments, the queries we type into search engines and the posts we make on social media sites.

Commonly, big data definitions invoke the three Vs, and so far, we’ve talked about two of them: volume and variety. The third is velocity, which means that your dataset is continuously growing, often nonlinearly, and that too can frustrate data management tools. In practical terms, if you have fast-growing data sets near petabyte size, and the data involved is hard to process without a team of developers writing custom code, then you have a big data problem. If not, there’s likely an easier way to analyze your data.

You can identify big data using the "three Vs": volume, variety and velocity.

Big-data processing systems like Hadoop work by slicing up big, complex data sets and sending those slices out to tens, hundreds or thousands of servers that process information, then forward results back for further processing or presentation. The processing that happens at those servers is custom-developed (again, by programmers) based on the data and what you’re looking for within it. This is how Google can tell you in a fraction of a second that your query found millions of matches and that it’s now showing you the top 10. Lots and lots of servers got your query, found a few matches in their data, then sent them back to be collated and presented using algorithms that judge the useful responses based on previous similar requests.

Makes you feel kind of special, doesn’t it?

In fact, Hadoop and its commercial competitors aren’t so much big-data processing systems as they are big-data organizing systems. It’s up to humans to do the programming. And this gets us to the current working definition of artificial intelligence (AI).

Big-data processing systems comprise oodles of individual servers that process information.
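
For the curious, the slice, process and collate pattern described above can be sketched in a few lines of Python. This is a toy under loud assumptions: threads stand in for servers, a five-item list stands in for the data set, and the names `search_shard` and `scatter_gather` are made up for illustration. It is not how Hadoop or Google is actually implemented. Note that a human still had to write the matching logic.

```python
from concurrent.futures import ThreadPoolExecutor
from heapq import nlargest


def search_shard(shard, query):
    """One pretend 'server': score the documents in its own slice of the data."""
    term = query.lower()
    hits = []
    for doc_id, text in shard:
        score = text.lower().split().count(term)  # hand-written matching rule
        if score > 0:
            hits.append((score, doc_id))
    return hits


def scatter_gather(documents, query, shards=3, top=2):
    # Scatter: slice the data set into roughly equal pieces.
    slices = [documents[i::shards] for i in range(shards)]
    # Each worker processes its slice independently (a stand-in for a server).
    with ThreadPoolExecutor(max_workers=shards) as pool:
        partials = list(pool.map(lambda s: search_shard(s, query), slices))
    # Gather: collate the partial results and keep only the best few.
    merged = [hit for part in partials for hit in part]
    return len(merged), nlargest(top, merged)


documents = [
    ("doc1", "big data is big"),
    ("doc2", "small data"),
    ("doc3", "big ideas"),
    ("doc4", "nothing here"),
    ("doc5", "big big big"),
]

total, best = scatter_gather(documents, "big")
print(f"{total} matches, showing the top {len(best)}: {best}")
```

Swap the threads for a few thousand machines and the list for a few petabytes, and you have the general shape of the thing.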

It’s not AI if it doesn’t learn ...

Since the 1950s, it’s been the goal of artificial intelligence to get computers to think like people. When dealing with large complex datasets like images and audio, our brains are faster than computers and purpose-built for parallel processing. Because the computer systems of last century lacked the raw power to work like our brains, programmer smarts substituted for massive computing power.

Today, we have machine learning (ML), and that’s a huge step toward true AI. With machine learning, big data parallel processing systems, usually called neural nets, look at lots and lots of data and learn from it. You can show the ML system a few hundred thousand English-to-French translations, with the goal of teaching it to translate. The more examples of how to do something right you show the ML system, the more it refines its algorithm to get better. It’s like when babies learn to talk. They mimic what they hear, and when they get the sounds right, we provide positive feedback. (Say dada!) Eventually, you can’t shut the kid up. The idea is about the same with neural networks.

Machine learning (ML) is a huge step toward artificial intelligence (AI), but it's not the same thing.

Machine learning falls short of true AI because it has a hard time with context. If you’re trying to teach your ML system to write, you could show it lots of contemporary fiction and non-fiction books, newspapers and web articles, and it might start to do a pretty good job. Then throw it Chaucer and Shakespeare, and watch it fall apart. Give it some military manuals or scientific papers, and it’ll be hopelessly lost in the seas of jargon and arcane writing styles.

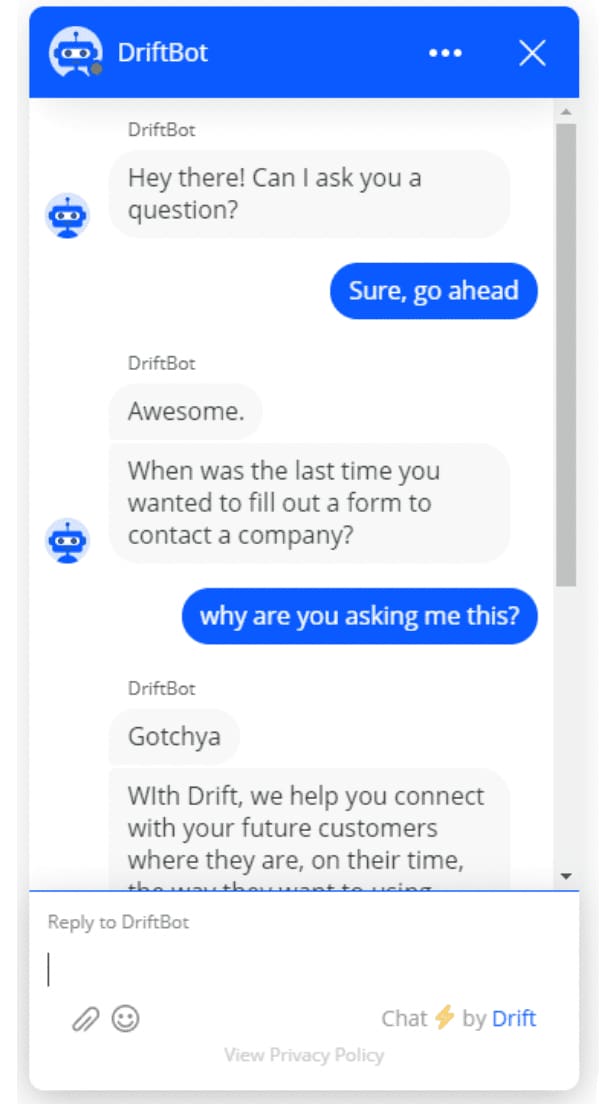

Everyone likes to talk about AI chatbots, but not many of them are backed by neural nets that allow the bot to actually learn and improve. Three that are: Siri, Alexa and Google Assistant. Most of the bots you talk to on the phone or in web chats aren’t AI. They’re just taking your input, matching it to some known phrases and giving you canned replies. There’s no learning going on.

This chatbot clearly hasn't been doing its homework.
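
Here is roughly what the non-learning kind of bot amounts to, as a minimal Python sketch. The phrases, the replies and the `canned_bot` name are invented for illustration and are not taken from any real product.

```python
# Hypothetical phrase table: every reply the bot will ever give is written here.
CANNED_REPLIES = {
    "hours": "We are open 9 to 5, Monday through Friday.",
    "refund": "Refunds take 5 to 7 business days.",
}
FALLBACK = "Sorry, I didn't catch that."


def canned_bot(message):
    """Match the input against known phrases and return a fixed reply."""
    text = message.lower()
    for phrase, reply in CANNED_REPLIES.items():
        if phrase in text:
            return reply
    return FALLBACK  # nothing is stored or adjusted, so nothing is learned


print(canned_bot("What are your hours?"))
print(canned_bot("Where is my package?"))
```

Ask it the same question a thousand times and the thousand-and-first answer will be identical, because nothing in the program changes in response to what it sees.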

... and it learns only if it has a lot of examples.

One thing that should be evident: There’s no AI without big data. Children take a year or so to begin to speak; they need that long to take in enough data to refine their neural pathways and build their speech centers. Computer neural nets (and virtually all other AI incarnations) can learn fast only if they have a lot of examples. If you want to teach the net to read X-rays to detect lung cancer, you show it many thousands of X-rays of healthy lungs and many thousands of cancerous lungs, and over time, it develops its own algorithm to decide what’s what.

Yep, you read that right: The computer comes up with its own algorithm to process the data, and it doesn’t typically tell us what that algorithm is. The computer will, for example, tell you where on an X-ray it thinks the cancer is, but it won’t necessarily tell you how it got to that conclusion. It’s pattern matching and probabilities, but the programmers don’t generally know how the algorithm actually works, and they rarely give the neural net any guidance. One reason it doesn’t tell you the precise algorithm is that the neural network is constantly changing and improving its algorithm, or “learning,” which is what defines it as “artificial intelligence.” And that brings its own problems.
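
To make “the computer comes up with its own algorithm” a little more concrete, here is a minimal sketch of a perceptron, a single artificial neuron and one of the simplest ancestors of today’s neural nets. The function names, the toy data and the learning rate are assumptions chosen for illustration. Real systems use far larger networks and far more data. Nobody writes the decision rule here; it falls out of repeated corrections against labeled examples.

```python
def predict(model, features):
    """Apply the learned weights: output 1 if the weighted sum is positive."""
    weights, bias = model
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Learn weights from (features, label) pairs by correcting mistakes."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict((weights, bias), features)
            # Nudge the weights toward the right answer only when wrong.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Toy labeled data: the answer is 1 only when both inputs are 1.
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

model = train_perceptron(examples)
for features, label in examples:
    print(features, "expected", label, "predicted", predict(model, features))
```

The learned “algorithm” is nothing more than the handful of numbers in `model`. With three numbers you can still squint and see what it is doing. With millions of them, as in a real neural net, you mostly can’t.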

Most analytics today isn’t machine-learning-based. It’s just really complex programming that likely also uses probabilities and pattern matching of some sort, but the algorithm doesn’t self-improve, so again, there isn’t any learning going on. That doesn't mean it isn’t an incredibly powerful tool, but it’s not artificial intelligence.

It’s important to be precise here, for a few reasons. First, throwing around terms like “big data” and “AI” will make you seem smart to some people and not so smart to others, and the people you impress are probably not the ones who matter. Second, machine learning is expensive to develop. In some cases, like speech-to-text or translation, these applications work great from the cloud and benefit from your use: Diversity of samples helps the system get better, and lots of users means true economy of scale. If you are tasked with buying or evaluating ML, you’ll want to know how it really works so that you understand what you’re buying.

Before you buy a technology that claims to make use of big data, AI or ML, make sure you understand how it works. Failing to do so can be a costly slip-up.

But beyond that, true AI is something that tech visionaries both respect and worry about. Elon Musk talks a lot about AI gone astray as an “existential threat” to humans. Even Alphabet’s CEO, Sundar Pichai, thinks government regulation will be required to prevent destructive use. AI facial recognition technology is a machine learning strong suit, but it threatens basic tenets of society and personal privacy. And AI is becoming good at deducing health risks down to the individual. What if an insurance company wants to reject you because an AI system says you have a 97% probability of getting an expensive disease?

Plus, by focusing so much on AI, we devalue the cool stuff that happens without AI. Robotic processes that aren’t AI-based, but that do benefit from cheap, pervasive computing power, don’t come with the same concerns about misuse. Robotic baseball umpires can call balls and strikes better than humans. The last thing you want is to throw AI into the mix and create a system with gray areas, where learning can equate to bias. Just keep the game moving.

The bottom line

So, if you want to be nerd-cool at your next cocktail party and hear someone waxing eloquent about an AI system that isn’t AI, feel free to tell them they don’t know what they’re talking about. You’ll be doing society a favor, and trust me, everyone loves that person at the party. Your mileage may vary.

Art Wittmann is editor of Brainyard. He previously led content strategy across Informa USA tech brands, including Channel Partners, Channel Futures, Data Center Knowledge, Container World, Data Center World, IT Pro Today, IT Dev Connections, IoTi and IoT World Series Events, and was director of InformationWeek Reports and editor-in-chief of Network Computing.